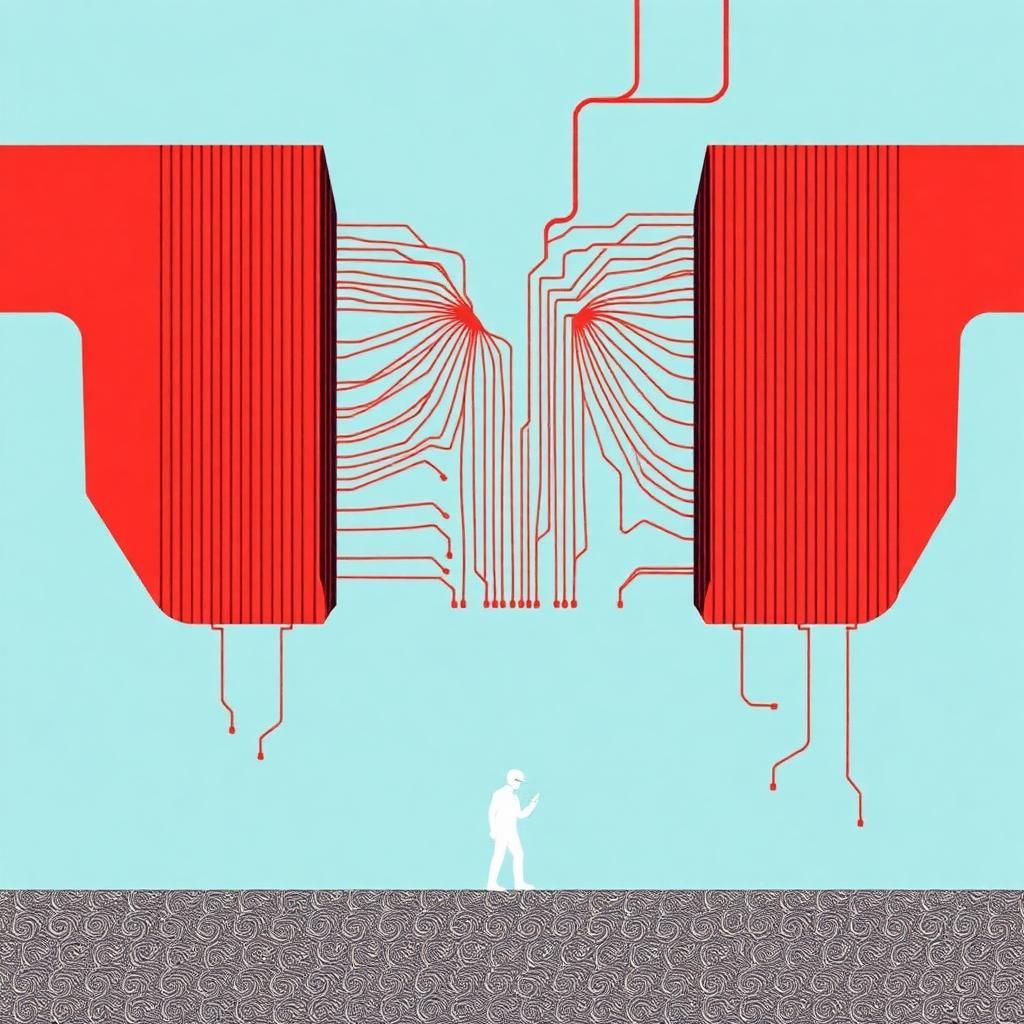

In today’s digital age, the way we receive and process information is fundamentally shaped by algorithms. These ranking and recommendation systems curate the content we see on social media platforms, search engines, and news apps. While this personalized approach can enhance our online experience, it also raises critical concerns about how algorithms influence our beliefs and worldviews. This phenomenon, sometimes called the “algorithm prison,” can trap users within echo chambers and filter bubbles, reinforcing pre-existing opinions and limiting exposure to diverse perspectives. This article explores how your feed shapes your beliefs by examining the role of algorithms in content curation, the psychological implications of algorithm-driven feeds, and the societal consequences of living inside algorithmic bubbles.

How Algorithms Curate Your Feed

At the heart of every social media platform, search engine, and news aggregator is an algorithm designed to tailor content to individual preferences. These algorithms analyze a vast array of data points, including your past interactions, likes, shares, clicks, watch time, and even your network connections. Based on this data, they predict what content you are most likely to engage with and prioritize it in your feed.
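To make the idea concrete, here is a minimal sketch in Python of engagement-based ranking. It is not how any real platform works: the `Post` type, the `topic_affinity` and `rank_feed` functions, and the scoring rule (the share of your past interactions that involved a post's topic) are all simplifying assumptions chosen for illustration. Real systems combine many more signals, such as watch time and network connections.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    post_id: str
    topic: str


def topic_affinity(interactions: list[str]) -> dict[str, float]:
    """Turn a history of engaged-with topics into shares that sum to 1."""
    counts = Counter(interactions)
    total = sum(counts.values())
    return {topic: n / total for topic, n in counts.items()}


def rank_feed(posts: list[Post], interactions: list[str]) -> list[Post]:
    """Order candidate posts by predicted engagement, highest first."""
    affinity = topic_affinity(interactions)
    # Hypothetical scoring rule: predicted engagement is simply the share
    # of past interactions that involved the post's topic. Topics the user
    # has never engaged with score 0 and sink to the bottom.
    return sorted(posts, key=lambda p: affinity.get(p.topic, 0.0), reverse=True)


# Each entry is the topic of one past like, share, or click.
history = ["politics", "politics", "cooking", "politics"]
candidates = [
    Post("a", "travel"),
    Post("b", "cooking"),
    Post("c", "politics"),
]

feed = rank_feed(candidates, history)
print([p.post_id for p in feed])  # ['c', 'b', 'a']
```

Even in this toy version, the travel post lands last simply because the user has never interacted with that topic before.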

For example, if you frequently like or comment on posts about a particular political ideology or hobby, the algorithm will deliver more similar content, reinforcing your interests and beliefs. This creates a feedback loop in which your feed becomes increasingly homogeneous, showing you more of what you already agree with while filtering out contradictory viewpoints. The goal is to maximize user engagement, because more time spent on the platform often translates into higher ad revenue.
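The feedback loop described above can be simulated in a few lines of Python. This is a deliberately crude model, not a measurement of any real platform: the function name `simulate_feedback_loop`, the starting weights, and the assumption that the user engages with everything shown (each engagement adding one point to that topic) are all invented for illustration.

```python
def simulate_feedback_loop(initial_weights, rounds, feed_size=2):
    """Return, for each round, the share of total interest held by shown topics."""
    weights = dict(initial_weights)  # copy so the caller's dict is untouched
    shown_share = []
    for _ in range(rounds):
        # The feed shows only the topics with the highest current weight.
        shown = sorted(weights, key=weights.get, reverse=True)[:feed_size]
        # Assumption: the user engages with every shown topic, which
        # raises that topic's weight for the next round.
        for topic in shown:
            weights[topic] += 1
        total = sum(weights.values())
        shown_share.append(sum(weights[t] for t in shown) / total)
    return shown_share


start = {"politics": 4, "sports": 3, "science": 2, "travel": 1}
shares = simulate_feedback_loop(start, rounds=5)
print([f"{s:.0%}" for s in shares])
```

Under these assumptions, the two topics that start out ahead take a larger share of the user's recorded interest every round, while science and travel are never shown and so never get a chance to catch up.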

However, this personalization comes at a cost. By filtering content through your previous behaviors, algorithms create a narrow information environment. Users often unknowingly become confined within “filter bubbles,” where their exposure to diverse opinions and facts is limited. This raises questions about the objectivity and openness of the information we consume daily.

Psychological Implications: Confirmation Bias and Cognitive Anchoring

The algorithm prison doesn’t just restrict what you see; it also exploits innate psychological tendencies such as confirmation bias and cognitive anchoring. Confirmation bias is the human propensity to seek, interpret, and remember information that confirms pre-existing beliefs while discounting contradictory evidence. Algorithms feed this tendency by consistently serving content aligned with your views, reinforcing your sense of correctness and your trust in those beliefs.

Over time, this can lead to cognitive anchoring, where your initial beliefs become the reference point against which you evaluate all new information. When your feed continuously reinforces certain narratives, it strengthens these mental anchors, making it more difficult to question or reconsider your viewpoints.

Moreover, the reward mechanisms built into social media, such as likes, shares, and positive comments, are often described as creating dopamine-driven feedback loops. This reward response encourages users to seek more content that aligns with their beliefs and identity, further deepening the algorithmic echo chamber.

The result is a self-reinforcing cycle: the algorithm shows you content that confirms your beliefs, your brain responds positively to this confirmation, and you engage more with similar content, prompting the algorithm to deliver yet more of the same. This cycle can polarize opinions, reduce critical thinking, and make users more susceptible to misinformation and propaganda.

Societal Impact: Polarization, Misinformation, and Democratic Discourse

The algorithm prison extends beyond individual users, impacting society at large. By shaping the beliefs of millions, algorithm-driven feeds have contributed to increased political polarization and social fragmentation. Echo chambers amplify extreme viewpoints while muffling moderate or dissenting voices, making it harder for communities to find common ground.

Misinformation and fake news also thrive in this environment. Algorithms often prioritize engagement over accuracy, so sensational or emotionally charged content tends to perform better regardless of its truthfulness. When users are constantly exposed to misleading or false information that aligns with their biases, it becomes difficult to distinguish fact from fiction. This undermines public trust in media and institutions.

Furthermore, the narrowing of perspectives hampers democratic discourse. Healthy democracies rely on informed citizens who can critically evaluate diverse viewpoints and engage in respectful debate. The algorithm prison, by reinforcing division and limiting exposure to different ideas, threatens this foundation. It can lead to social unrest, decreased civic participation, and challenges in addressing complex societal issues that require collaborative solutions.

Breaking Free from the Algorithm Prison

While algorithms are designed to keep you engaged, there are steps you can take to regain control over your information diet and beliefs:

1. Seek Diverse Sources: Intentionally follow a variety of news outlets, opinion leaders, and communities that offer different perspectives. This helps break the filter bubble and exposes you to a broader range of ideas.

2. Use Algorithm Alternatives: Consider platforms or apps that emphasize chronological feeds or user control over content discovery rather than algorithmic curation.

3. Practice Critical Thinking: Develop habits of skepticism and fact-checking. Question the sources of information and be cautious of sensational headlines or emotionally charged content.

4. Limit Social Media Time: Reducing the time spent on algorithm-driven platforms can minimize exposure to echo chambers and reduce the addictive cycle of confirmation.

5. Engage in Offline Discussions: Real-world conversations with diverse groups can challenge your assumptions and provide richer context than online feeds.
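
To see why the second step above matters, the following Python sketch contrasts a chronological feed with an engagement-ranked one over the same three posts. The post data, the `predicted_engagement` values, and both function names are hypothetical, made up purely to show the difference in ordering.

```python
from datetime import datetime

# Hypothetical posts; the engagement scores are invented for illustration.
posts = [
    {"id": "a", "posted_at": datetime(2024, 1, 1, 9, 0), "predicted_engagement": 0.9},
    {"id": "b", "posted_at": datetime(2024, 1, 1, 12, 0), "predicted_engagement": 0.2},
    {"id": "c", "posted_at": datetime(2024, 1, 1, 10, 30), "predicted_engagement": 0.5},
]


def chronological_feed(posts):
    """Newest first; the reader's past behavior plays no role."""
    return sorted(posts, key=lambda p: p["posted_at"], reverse=True)


def engagement_feed(posts):
    """Highest predicted engagement first, regardless of when it was posted."""
    return sorted(posts, key=lambda p: p["predicted_engagement"], reverse=True)


print([p["id"] for p in chronological_feed(posts)])  # ['b', 'c', 'a']
print([p["id"] for p in engagement_feed(posts)])     # ['a', 'c', 'b']
```

In the chronological version, the post the model expects you to like least appears first simply because it is the newest, which is exactly the kind of exposure an engagement-ranked feed tends to filter out.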

Conclusion

The algorithm prison is a powerful and pervasive force shaping how we perceive the world, reinforcing our beliefs and limiting our intellectual horizons. While algorithms enhance convenience and personalization, they also risk creating closed loops that hinder open-mindedness and critical thinking. Understanding how these digital mechanisms work and their psychological and societal impacts is essential for navigating today’s information landscape. By consciously diversifying the content we consume and fostering healthier media habits, we can break free from algorithmic constraints and cultivate more balanced, informed beliefs.